User Testing

A method for engaging would-be customers to learn whether our designs work in the wild.

The nuts and bolts: User testing is the process of putting a website’s, app’s, product’s, or service’s interface and functions to the test by having real users do certain tasks in realistic situations.

We can either invest in launching a product or feature and then learn whether it works in the marketplace, or break the problem down along the way and learn what people think before we put lots of time, money, and reputation on the line.

Testing lets us learn from would-be customers while product decisions are still at stake. We can identify the biggest mistakes and adapt our products faster to what people really value. Results from user tests tend to fall into two buckets: quantitative (hard facts like “task failure”) and qualitative (subjective things like “loved that color”).

First, Know Which End You’re Designing For

Which kind of test we need is dictated by where we are in the design funnel. If we are in an early stage of the design funnel, we are opening up the problem and developing new concepts that need broad validation. Later in the design funnel, we’ve decided on a solution or a couple of solutions and want to make them as efficient as possible in achieving their purpose.

Let’s define those two kinds of test:

- Concept testing helps us find the right design from many. The feedback we want here is validation of positioning and desirability: we essentially ask whether people understand our idea and, if they do, whether they want it. The concepts we test tend to be broad and only coarsely detailed, and they are usually presented as very short prototypes.

- Usability testing helps us get a selected design right. Here, we focus on things such as how efficiently people perform tasks and on identifying issues with small details. The concepts tested are more detailed and refined, and the prototypes are thoroughly fleshed out.

Four Methods for Conducting a Test

Once we have decided whether we’re conducting a concept or a usability test, we need to figure out which method to use to run it. Apart from heuristic evaluation, the primary distinction between the methods comes down to location: convenience might dictate testing with people in distant locations, and context might dictate testing people in a specific environment.

Heuristic Evaluation

Heuristic evaluation is great for quick, cheap feedback. It works best with a small set of evaluators (say, no more than five people) who critique the usability of your designs against a set of usability heuristics. This lets you rapidly ferret out those pesky usability problems so you can iterate faster.

Direct Observation in a Lab Setting

This works well for detailed one-on-one interviews and talk-out-loud sessions focused on either concept or usability testing. It also tends to work best when the office or “lab” environment is not terribly different from the actual setting in which a user would use the application or site. However, you have to make sure participants can set aside enough time to come in and take the tests.

Remote Testing

This is great for capturing feedback from people who don’t live nearby. With the marvelously modern video technology we have, we’re able to capture the tester’s mood and reactions, so it’s almost like being in a lab setting. As with lab testing, this approach works well for interviews and talk-out-loud sessions focused on either concept or usability testing.

Direct Observation, Field Study

Believe it or not, this has proved to be an important and effective tool for mobile research and testing where a lab or remote video scenario won’t do. With this approach, we’re looking to test in (or recreate) a realistic setting that users would actually experience or work in. Think about it this way: we need context about an actual physical location, because it can heavily influence users’ expectations and their ability to use mobile devices.

How to Run a User Test

Armed with the kind of test and the method for conducting it, you’ll find some common practices worth following for any test. Below we outline what you need to know to make a test run smoothly and effectively.

Getting Participants:

- Guerrilla testing approach — “The maximum benefit-cost ratio is achieved when using between three and five subjects,” wrote Jakob Nielsen. This reduces the overhead of individual tests and makes it easier to conduct them more frequently.

- Participant users — The biggest mistake is bringing in participants who would never use your product. The second biggest mistake is focusing on demographics.

- Setting incentives — The best incentive is participants’ desire to be heard and to influence something they care about. Beyond that, we offer small gift cards of $10–20, often as a surprise at the end.

- Do-it-yourself recruiting — Posting specific listings to Craigslist and tapping people within our immediate or secondary network who fit basic user criteria.

- Reusing participants — Typically, we try to tap new people with each test, but we find it also works to bring back previous testers, as long as they continue to be candid and don’t attempt to please the test giver with stock answers.
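One way to see why small groups give the best return is the problem-discovery model Nielsen and Landauer published, which estimates the share of usability problems found by n participants as 1 − (1 − L)^n, where L is the chance that a single participant uncovers a given problem. The Python sketch below assumes every participant has the same, independent L, and uses 0.31, an average Nielsen has cited, as an assumed default; your own product may differ considerably. The function name is hypothetical.

```python
def share_of_problems_found(n_users: int, p_single: float = 0.31) -> float:
    """Expected fraction of usability problems found by n_users participants.

    Assumes each participant independently uncovers any given problem with
    probability p_single (0.31 is an assumed, commonly cited average).
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be a probability between 0 and 1")
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    # Chance a problem is missed by everyone is (1 - p)^n; the rest are found.
    return 1.0 - (1.0 - p_single) ** n_users


if __name__ == "__main__":
    # Print the diminishing-returns curve for 1 to 10 participants.
    for n in range(1, 11):
        print(f"{n:2d} users: {share_of_problems_found(n):.0%} of problems found")
```

Running it shows each extra participant adding less than the one before; under these assumptions, five participants already surface roughly 84% of the problems, which is why frequent small rounds tend to beat one large round.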

Conducting A User Test

Do a complete dry run of your test 24 hours beforehand to work out any kinks. See how it flows and make sure your equipment is properly set up. Be sure to hold the dry run in your actual test setting: this ensures you have room for your test equipment and that your test script works well in that environment. Common issues to watch for are bad lighting, noise, and unwelcome distractions.

Client or stakeholder participation is OK, but they can be disruptive. Make sure they know to act only as passive observers, and have them steer clear of test takers.

Do NOT allow stakeholders to make tweaks during the test, as this will quickly bias your results. If there are concerns about whether a certain test item is effective, you can always organize another quick test two days later.

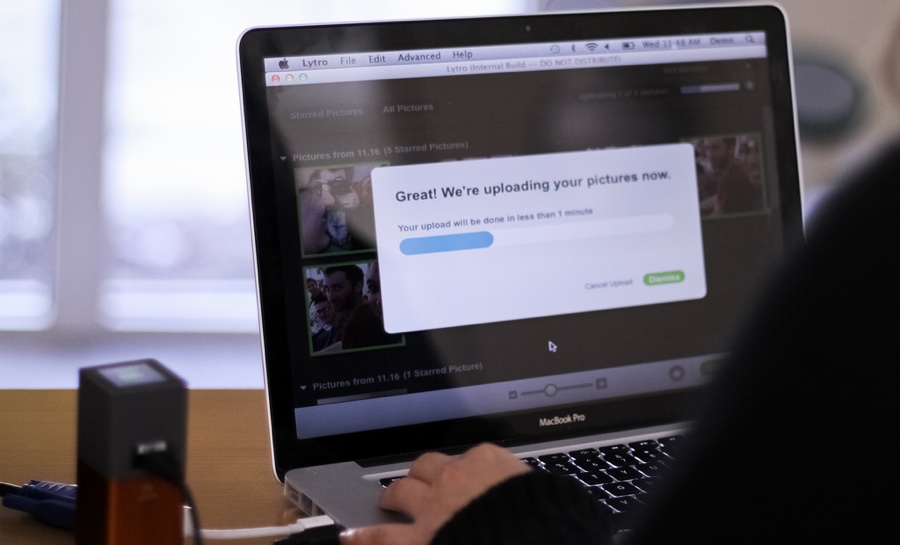

Our standard toolset for web app testing includes a laptop with a built-in camera, ZOOM for screen and video recording, and a familiar mouse so your participant doesn’t get stuck operating the machine. For recording mobile or physical device tests, we use tripods with smartphones or digital cameras to grab snippets of video, which we later analyze and share.

Script out your test and leave room for shorthand notes alongside your questions. Keep the script concise, ideally in bulleted lists: you won’t have time to read much from the paper during the actual test.

Beginning the User Testing

Welcoming participants and providing a waiting area for people who arrive early are important for making participants comfortable, but they are often overlooked.

Don’t forget any forms that apply, such as nondisclosure agreements covering the confidentiality of your work, consent forms for minors, or any other legally required documents. Have these printed and be ready to quickly explain them to your participants so they understand what is at stake.

Confirm their background information to ensure they are representative users and to understand more context for their motivations and expectations.

During The Test

Timing is super key and very hard to get right. The practice dry run should give you a sense of flow and pacing. Have a teammate keep track of time and nudge you along.

Offer a short introductory icebreaker. You want to disarm participants so they feel comfortable and natural giving you answers. Test worksheets should be outlines that let you (or a teammate taking notes) jot down responses on the fly. Often these worksheets are a truncated, shorthand form of the test script itself.

Recording participant experiences should focus on noting a few key aspects:

- Noticing friction points where people hesitate, do something unexpected, get something wrong or get stuck.

- Recognizing conflict between their mental model and that of your application.

Work to stay on script, but don’t be afraid to dig deeper into a discovery that comes out of a friction point or a conflicting mental model.

If you are using ZOOM to record the screen session, note the timestamps of highlight points so you can come back to them for reference or pull them as video clips for your summary.

Summarizing memories should focus on the peak-end rule, which states that people remember the highs, lows, and ends of experiences and forget most other details. We tally up the highs, lows, and final tasks or experiences from each participant to gauge how people are most likely to remember their experience.
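To make the peak-end tally concrete, here is a minimal Python sketch. It assumes you record each participant’s notable moments in session order with a simple rating; the −2 to +2 scale, the `Moment` type, `peak_end_summary`, and the sample data are all hypothetical. It counts which moments most often end up as a participant’s high, low, or final experience.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Moment:
    label: str   # the task or screen the note refers to
    rating: int  # hypothetical scale: -2 (very negative) to +2 (very positive)


def peak_end_summary(sessions: dict[str, list[Moment]]) -> dict[str, Counter]:
    """Tally each participant's high, low, and final moment across sessions."""
    tallies = {"high": Counter(), "low": Counter(), "end": Counter()}
    for moments in sessions.values():
        if not moments:
            continue  # skip participants with no recorded moments
        tallies["high"][max(moments, key=lambda m: m.rating).label] += 1
        tallies["low"][min(moments, key=lambda m: m.rating).label] += 1
        tallies["end"][moments[-1].label] += 1  # moments are in session order
    return tallies


if __name__ == "__main__":
    sessions = {
        "P1": [Moment("sign up", 1), Moment("search records", -2),
               Moment("view family tree", 2)],
        "P2": [Moment("sign up", 0), Moment("search records", -1),
               Moment("export", 1)],
    }
    for kind, counts in peak_end_summary(sessions).items():
        print(kind, counts.most_common())
```

Ties go to the first moment with that rating, because Python’s `max` and `min` return the first maximal or minimal item they encounter; if ties matter for your study, you may want to record them separately.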

Check out the video below for how we conducted a user test for an ancestry website:

Ending The User Test & Afterwards:

Ending on time is important for obvious reasons. For one, you can’t afford to run into the next test. For another, you want to avoid letting test fatigue set in.

Thanking them and seeing them off is not only the polite thing to do; it also makes them more likely to be available for future tests.

Gifting and compensation in the form of a small gift card always come at the end, when you thank participants. If you feel their feedback on future tests would be valuable, ask if they’d be willing to participate again.

Following up with a ‘thank you’ email is one last way to leave a participant with a positive experience.

Summarizing Results

Process: Reviewing test videos can be a huge time suck! Only use this to fill in holes in data you were unable to record during the session and to verify answers you were uncertain about. When you do this, be sure to use time marks from Silverback or your own notes to help speed up your process.

Facial expressions and emotional cues can be windows into people’s true feelings about what they are seeing. Add qualitative marks for patterns suggesting emotions that contradict the quantitative data, such as a participant who completes a task but looks frustrated doing it.

Aggregating responses should take the form of simple tallies related to either task performance (success/failure) or sentiment (like/indifferent/dislike).
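Because this aggregation is just counting, a few lines of Python can turn worksheet rows into per-task tallies. This sketch assumes each row is a hypothetical (participant, task, outcome, sentiment) tuple using the success/failure and like/indifferent/dislike labels from the paragraph above; the function names and sample rows are illustrative, so adapt the format to however your worksheets are actually structured.

```python
from collections import Counter, defaultdict

# Categories for task-performance and sentiment tallies.
OUTCOMES = ("success", "failure")
SENTIMENTS = ("like", "indifferent", "dislike")


def aggregate(rows):
    """Tally task outcomes and sentiment per task.

    rows: iterable of (participant, task, outcome, sentiment) tuples,
    a hypothetical worksheet format used for illustration.
    """
    outcomes = defaultdict(Counter)
    sentiments = defaultdict(Counter)
    for _participant, task, outcome, sentiment in rows:
        if outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome: {outcome!r}")
        if sentiment not in SENTIMENTS:
            raise ValueError(f"unknown sentiment: {sentiment!r}")
        outcomes[task][outcome] += 1
        sentiments[task][sentiment] += 1
    return outcomes, sentiments


def summary_lines(outcomes, sentiments):
    """Format one condensed line per task, e.g. for a summary worksheet."""
    lines = []
    for task, counts in outcomes.items():
        total = sum(counts.values())
        # Counter returns 0 for sentiments nobody reported.
        feelings = ", ".join(f"{s} {sentiments[task][s]}" for s in SENTIMENTS)
        lines.append(f"{task}: {counts['success']}/{total} succeeded ({feelings})")
    return lines


if __name__ == "__main__":
    rows = [
        ("P1", "find a record", "success", "like"),
        ("P2", "find a record", "failure", "dislike"),
        ("P3", "find a record", "success", "indifferent"),
    ]
    for line in summary_lines(*aggregate(rows)):
        print(line)
```

Rejecting unknown labels up front keeps typos on a hand-filled worksheet from silently creating extra categories in the tallies.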

Presentation: Worksheet summaries should follow a format similar to the test worksheet, aggregating responses in an even more condensed form. The goal is to inform nonparticipants about the results as efficiently as possible.

Video should be used sparingly to underscore common patterns of problems or opportunities found during the test. We often treat this as a highlight reel. Take care that the video clips map to the actual findings so you don’t give viewers a skewed sense of the results.

Marking up screenshots or flows is another great way of representing findings about user behavior directly on the product. This makes findings stand out, so they are clearer and easier to act on.

Present within two days of the last test to avoid over-documenting and to keep the team’s interest piqued.

Get The Feedback You Need

You may not want to hear the constructive feedback your potential users have, but it’s crucial for checking that your ideas and concepts fit your overall vision for a project. User testing is just one step in getting that feedback. After all, we all love good feedback. So get testing!